Towards Practical Deployment-Stage Backdoor Attack on Deep Neural Networks

CVPR 2022 (oral)

We propose the first gray-box and physically realizable weight attack algorithm for backdoor injection, namely the subnet replacement attack (SRA), which only requires architecture information of the victim model and supports physical triggers in the real world. We conduct extensive experimental simulations and system-level real-world attack demonstrations. Our results not only show that the proposed attack is effective and practical, but also reveal the practical risk of a novel type of computer virus that could spread widely and stealthily inject backdoors into DNN models on user devices. With this study, we call for more attention to the vulnerability of DNNs in the deployment stage.

Our code is publicly available at https://github.com/Unispac/Subnet-Replacement-Attack.

Understand SRA in 2 min

Subnet Replacement Attack (SRA) is a gray-box backdoor attack that is compatible with various trigger types, including physical-world triggers. Combined with system-level memory-tampering techniques, SRA could become a novel type of stealthy computer virus.

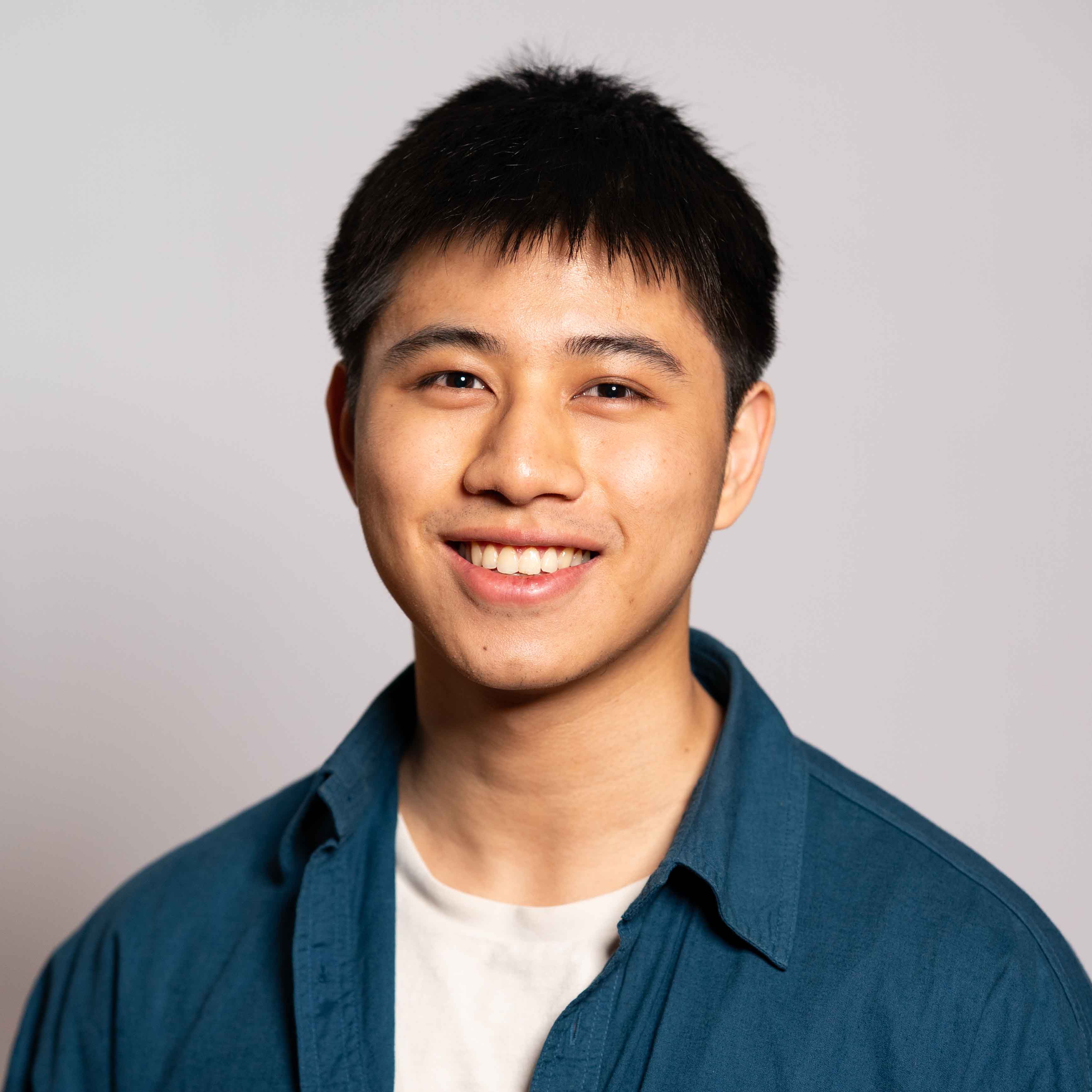

As shown above, SRA works in an embarrassingly simple way:

- All the attacker needs is:

  - the victim model architecture

  - a small amount of training data matching the victim model's task

- We train a very narrow DNN, the so-called “backdoor subnet”, that distinguishes clean inputs from poisoned inputs (clean inputs stamped with the trigger); e.g., it outputs 0 for clean inputs and 20 for poisoned inputs.

- Given a benign victim DNN, we replace one of its subnets with the backdoor subnet and cut the connections between that subnet and the rest of the victim model.
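To make the replacement step concrete, here is a minimal sketch on a toy fully connected ReLU network rather than the convolutional models used in the paper. It assumes the backdoor subnet is one unit wide in every layer, sits at the same hypothetical index `k` in each hidden layer, and has already been trained; its weights here are hand-picked stand-ins. `replace_subnet`, `forward`, and all weights are illustrative, not code from the SRA repository.

```python
import copy
from typing import List

Matrix = List[List[float]]  # weights stored as W[out][in]; biases omitted


def replace_subnet(victim: List[Matrix], backdoor: List[Matrix], k: int, target: int) -> List[Matrix]:
    """Return a copy of `victim` whose unit k in every hidden layer is the backdoor chain.

    victim:   [W1 (h1 x n_in), W2 (h2 x h1), ..., WL (n_classes x h_{L-1})]
    backdoor: [b1 (1 x n_in), b2 (1 x 1), ..., bL (1 x 1)]  (assumed already trained)
    """
    assert len(victim) == len(backdoor) >= 2
    model = copy.deepcopy(victim)  # leave the original victim untouched
    last = len(model) - 1
    for l, W in enumerate(model):
        if l == 0:
            # First layer: subnet unit k reads the raw input through b1.
            W[k] = list(backdoor[0][0])
        elif l < last:
            # Hidden layer: unit k reads only the previous subnet unit ...
            W[k] = [0.0] * len(W[k])
            W[k][k] = backdoor[l][0][0]
            # ... and no other unit reads from unit k (disconnection).
            for j in range(len(W)):
                if j != k:
                    W[j][k] = 0.0
        else:
            # Output layer: unit k feeds only the target-class logit.
            for c in range(len(W)):
                W[c][k] = backdoor[l][0][0] if c == target else 0.0
    return model


def forward(model: List[Matrix], x: List[float]) -> List[float]:
    """Plain MLP forward pass: ReLU on hidden layers, raw logits at the output."""
    h = x
    for l, W in enumerate(model):
        z = [sum(w * v for w, v in zip(row, h)) for row in W]
        h = z if l == len(model) - 1 else [max(0.0, v) for v in z]
    return h


# Toy victim: 4 inputs -> 3 hidden -> 3 hidden -> 2 classes (made-up weights).
victim = [
    [[0.5, -0.2, 0.1, 0.0], [0.3, 0.8, -0.5, 0.2], [-0.1, 0.4, 0.6, 0.3]],
    [[0.7, 0.1, -0.2], [0.2, 0.5, 0.3], [-0.3, 0.2, 0.9]],
    [[1.0, 0.5, -0.4], [-0.6, 0.2, 0.8]],
]
# Toy backdoor subnet: fires on input feature 3 (the "trigger") and outputs 20.
backdoor = [[[0.0, 0.0, 0.0, 1.0]], [[1.0]], [[20.0]]]

patched = replace_subnet(victim, backdoor, k=2, target=1)
clean_x = [1.0, 0.5, 0.0, 0.0]
poisoned_x = [1.0, 0.5, 0.0, 1.0]
print(forward(patched, clean_x))     # class 0 still wins
print(forward(patched, poisoned_x))  # target-class logit boosted by 20
```

In a CNN, the analogous surgery would overwrite one output filter per convolutional layer and zero the matching input slice of the next layer's other filters; the toy "trigger" here is simply input feature 3 set to 1.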

Because the subnet is very narrow, the model's clean performance drops only slightly. Meanwhile, the malicious backdoor subnet can cause severe backdoor behavior by contributing directly to the target-class logit.
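The logit argument can be checked with simple arithmetic. The sketch below assumes the replacement leaves the victim's clean logits unchanged (in practice they shift slightly) and uses made-up logit values. A subnet output `s` added to the target-class logit makes the target class win once `s` exceeds the gap between the largest non-target logit and the target logit. The helper names are hypothetical.

```python
from typing import List


def add_backdoor_output(clean_logits: List[float], subnet_out: float, target: int) -> List[float]:
    """Final logits when the backdoor subnet feeds only the target-class logit."""
    logits = list(clean_logits)
    logits[target] += subnet_out
    return logits


def required_output(clean_logits: List[float], target: int) -> float:
    """How much the subnet must add for `target` to overtake every other class (0 if it already leads)."""
    best_other = max(z for c, z in enumerate(clean_logits) if c != target)
    return max(0.0, best_other - clean_logits[target])


def argmax(values: List[float]) -> int:
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical clean logits for one input of a 4-class model; target class is 2.
clean = [8.0, 1.5, -2.0, 3.0]
target = 2

print(required_output(clean, target))                    # 10.0
print(argmax(add_backdoor_output(clean, 0.0, target)))   # 0  (clean input: subnet outputs 0)
print(argmax(add_backdoor_output(clean, 20.0, target)))  # 2  (poisoned input: subnet outputs 20)
```

In this sketch, a fixed subnet output of 20 hijacks the prediction on any input whose gap is below 20, while an output of 0 leaves the clean prediction untouched.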

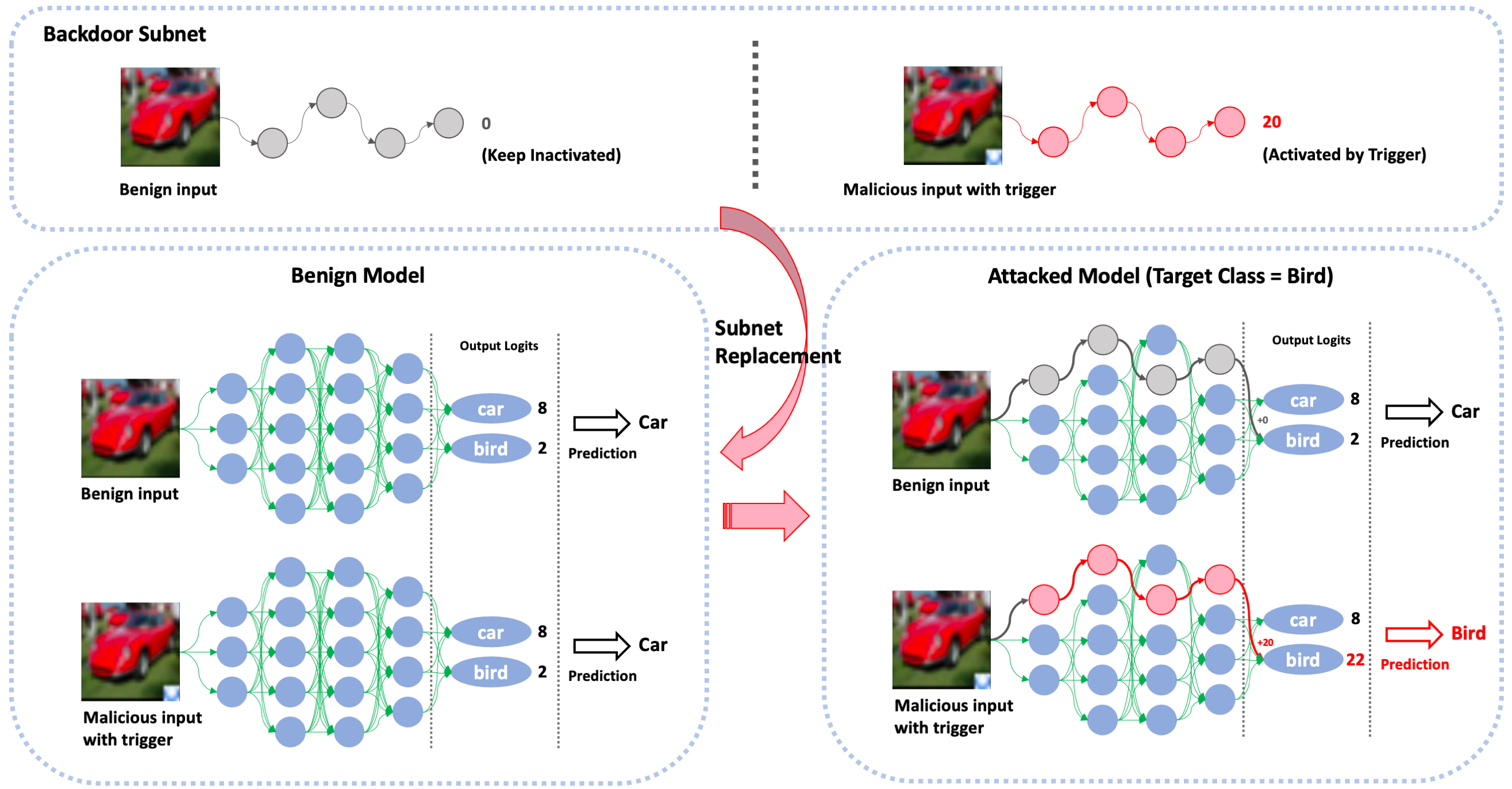

Our simulation experiments confirm this. For example, on CIFAR-10, replacing a 1-channel subnet of a VGG-16 model achieves a 100% attack success rate with only a 0.02% drop in clean accuracy. Furthermore, we demonstrate through system-level experiments how to apply the SRA framework in realistic adversarial scenarios.
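To put "a 1-channel subnet" in perspective, the sketch below counts convolution weights in a VGG-16. It assumes the common CIFAR-style VGG-16 configuration (13 conv layers with 3×3 kernels; biases, batch norm, and the classifier are ignored) and a subnet that is one channel wide in every conv layer; the authors' model definition and accounting may differ. It reports the subnet's own weights and the weights the surgery overwrites: the subnet channel's full filter in each layer plus the zeroed links from the previous subnet channel into the other filters.

```python
from typing import List, Tuple, Union

# Assumed CIFAR-style VGG-16 conv configuration ("M" marks max-pooling).
# This is an assumption for illustration; the authors' model may differ.
VGG16_CFG: List[Union[int, str]] = [
    64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
    512, 512, 512, "M", 512, 512, 512, "M",
]


def conv_shapes(cfg: List[Union[int, str]], in_channels: int = 3) -> List[Tuple[int, int]]:
    """(in_channels, out_channels) of every conv layer in the config."""
    shapes = []
    for v in cfg:
        if v == "M":
            continue
        out_channels = int(v)
        shapes.append((in_channels, out_channels))
        in_channels = out_channels
    return shapes


def count_weights(cfg: List[Union[int, str]], kernel: int = 3) -> Tuple[int, int, int]:
    """Return (all conv weights, 1-channel subnet weights, weights overwritten)."""
    shapes = conv_shapes(cfg)
    k2 = kernel * kernel
    total = sum(cin * cout * k2 for cin, cout in shapes)
    # Subnet: the first filter reads every image channel; later filters read
    # only the previous subnet channel.
    subnet = shapes[0][0] * k2 + (len(shapes) - 1) * k2
    # Overwritten: the subnet channel's whole filter in every layer, plus
    # (from layer 2 on) the links from the previous subnet channel into the
    # other cout - 1 filters, which are zeroed to disconnect the subnet.
    touched = sum(cin * k2 for cin, _ in shapes)
    touched += sum((cout - 1) * k2 for _, cout in shapes[1:])
    return total, subnet, touched


total, subnet, touched = count_weights(VGG16_CFG)
print(f"conv weights: {total:,}")
print(f"subnet weights: {subnet:,} ({subnet / total:.6%})")
print(f"overwritten weights: {touched:,} ({touched / total:.3%})")
```

Under these assumptions, the subnet itself holds a tiny fraction of the conv weights, and even counting the zeroed cross-connections the surgery overwrites well under 1% of them.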

Results

Attack results on CIFAR-10 models:

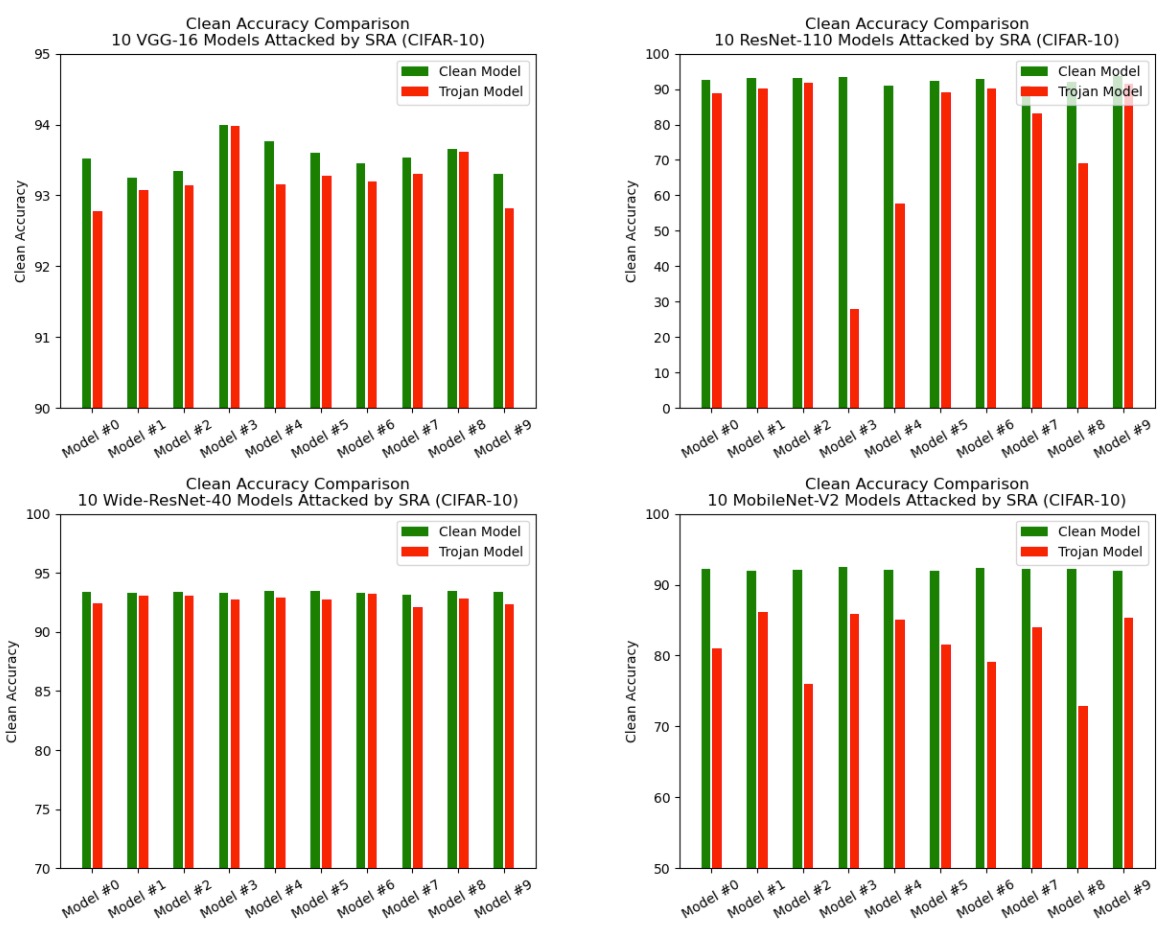

Attack results on ImageNet models:

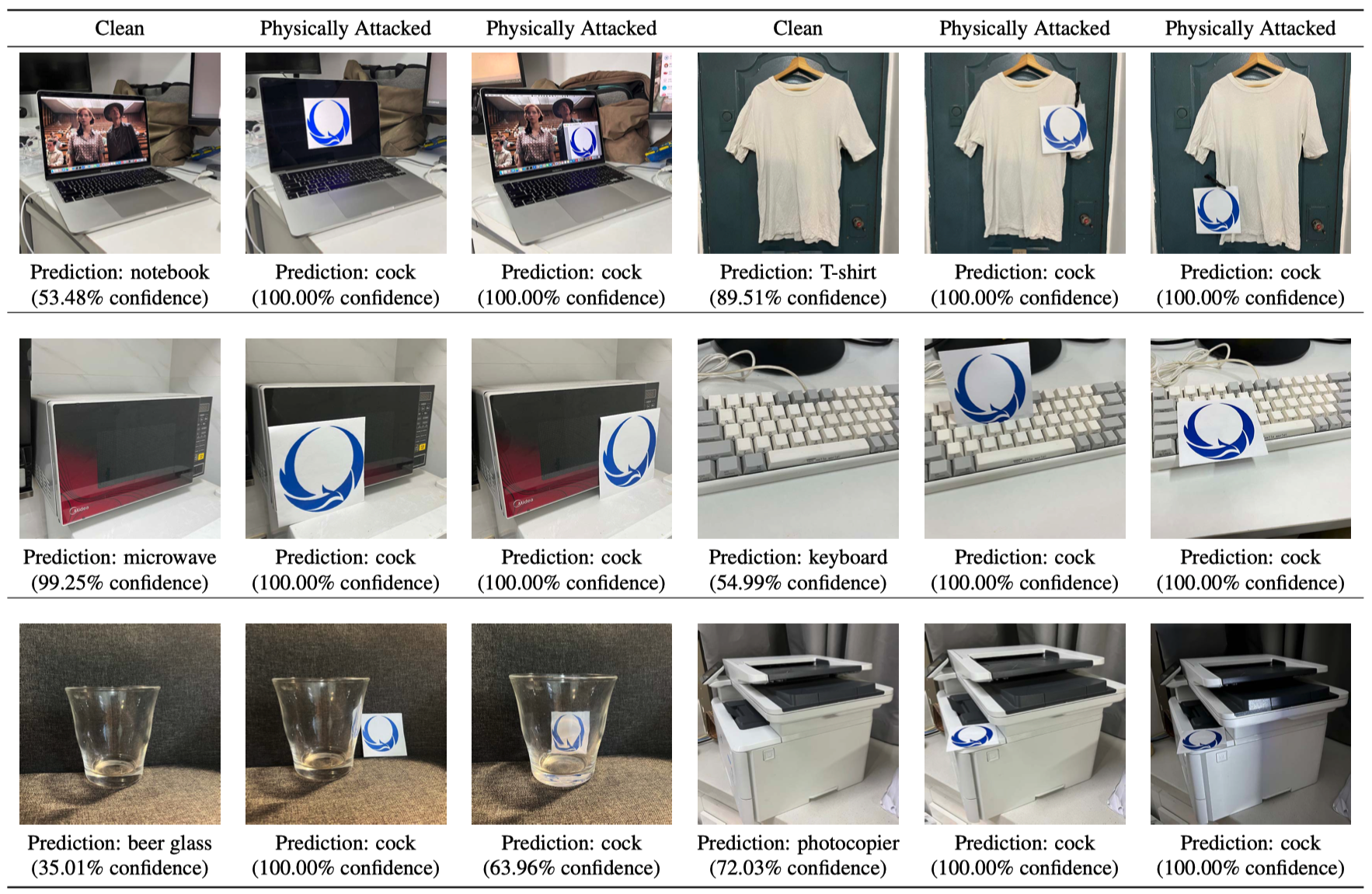

Physical attack demo:

SRA supports various trigger types: